How we made AI work: Real proof, real efficiency

Like many others, our AI journey started by chasing the tools. A slick demo would drop. We’d try it. A few people got excited, and then reality set in. Most demos were built for perfect scenarios. Change a single parameter, and the magic collapsed. Fixing the gap often took longer than just doing the work without AI.

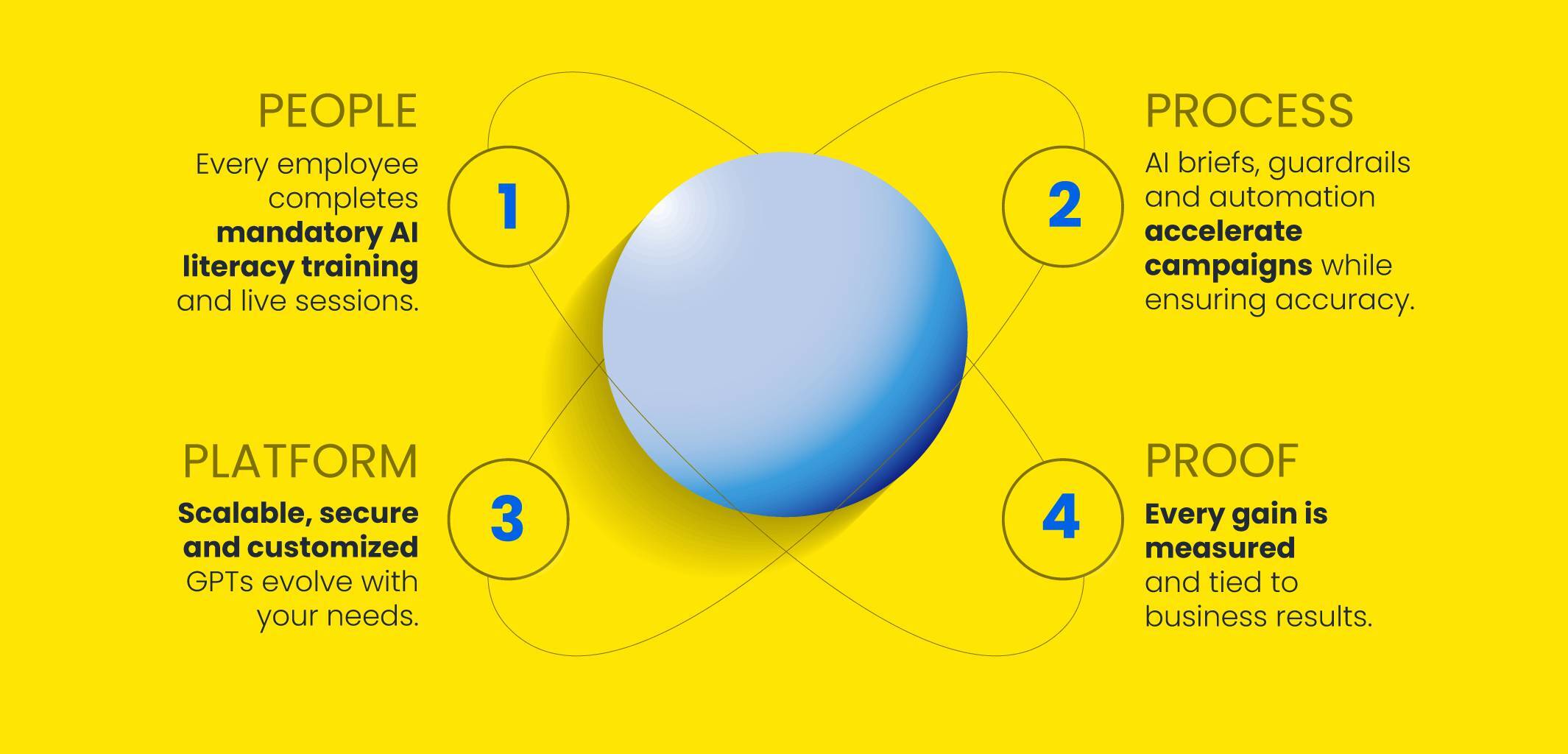

By the end of 2024, I called time out on that game. We didn’t need more tools to chase or more upskilling just to keep pace with demos; we needed an operating system. This is how we made AI work—how people, process, platform and proof became the rails for everyday work—and why our efficiency now shows up in hours saved instead of wasted learning curves.

People first (or nothing sticks)

We started by establishing a baseline of AI literacy. We issued LLM (large language model) licenses to everyone but gated access: each person had to complete core proficiency training and pass a simple assessment to prove comprehension first.

After that, we sent weekly, informative newsletters and ran weekly use-case trainings built around practical examples of AI applied directly to our day-to-day work.

The idea was to spread AI like an infection, showing everyone at the agency how remarkable it could be in practice until they caught the excitement bug. It was more an advertising campaign than training.

With this foundation, people gained the literacy to tell which tools were worth investigating and which ones were not. The mandate stayed clear: Protect human judgment and use AI to remove the legwork.

Process is the lever

We started using AI-ready briefs with the essentials—objective, audience, source docs, constraints and required claims. Employees could then use these briefs to give AI the right context, so it could support them better in completing their deliverables.
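To make the brief concrete, here is a minimal sketch of how those five essentials could be captured as a reusable structure. The class name `AIBrief`, its field names and its methods are illustrative assumptions, not the template we actually use.

```python
from dataclasses import dataclass, field


@dataclass
class AIBrief:
    """Hypothetical container for the five brief essentials named above."""

    objective: str
    audience: str
    source_docs: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    required_claims: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Return the names of essentials that are still empty."""
        return [name for name, value in vars(self).items() if not value]

    def to_context(self) -> str:
        """Render the brief as plain text to paste ahead of a request."""
        missing = self.missing_fields()
        if missing:
            raise ValueError(f"Brief is incomplete: {missing}")
        lines = [f"Objective: {self.objective}", f"Audience: {self.audience}"]
        for label, items in [
            ("Source docs", self.source_docs),
            ("Constraints", self.constraints),
            ("Required claims", self.required_claims),
        ]:
            lines.append(f"{label}:")
            lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)
```

Refusing to render an incomplete brief is a design choice in this sketch: it forces the missing context to be supplied by a person rather than guessed by the model.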

We also logged the working sessions (auto-transcribed). From there, we merged decisions, citations and edge cases into shared resources so we could catch overlaps and share fixes. Think less tribal knowledge, more company memory.

We also boosted research with AI: it swept approved sources, compiled citations and flagged contradictions. It then presented a clean stack so a human could scan more content in less time, then filter, validate and accept it into the project.

We deliberately built processes around the areas with the largest impact, compounding improvements where they mattered most.

Platform: Due diligence over hype

We ran a months-long, side-by-side evaluation of major LLM providers and a company-hosted model. Multiple roles completed parallel tasks. The results pointed clearly to one LLM, so we standardized licenses and training there.

For specialized AI tools, we defined the problem first, identified top candidates, then pressure-tested against our data, security and workflow constraints. We picked one, documented the playbook and kept an eye on the field in case something actually beat it. (Spoiler: New and shiny rarely does.)

Guardrails lived in the platform: SSO and role-based access, content provenance, and well-established usage policies. The team felt speed, not churn.

Proof (or it didn’t happen)

We systematically logged the hours gained or lost by running before-and-after comparisons on the same tasks with the same scope. When a pattern proved out, we repeated it. If a number stalled, we reworked the idea or retired it. It was methodical and evidence-based, not efficiency based on vibes.
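The before-and-after comparison boils down to simple arithmetic. The sketch below assumes that "faster" means the percentage reduction in hours against the baseline; the function name and the sample hours are hypothetical, not figures from our logs.

```python
def percent_faster(baseline_hours: float, ai_hours: float) -> float:
    """Percentage of baseline hours saved on the same task and scope.

    A negative result means the AI-assisted run took longer.
    """
    if baseline_hours <= 0:
        raise ValueError("baseline_hours must be positive")
    return (baseline_hours - ai_hours) * 100 / baseline_hours


# Hypothetical example: a task that took 10 hours before and 4 hours after.
saved = percent_faster(baseline_hours=10, ai_hours=4)
print(f"{saved:.0f}% faster")
```

Keeping negative results in the log matters as much as the wins, since those are the numbers that tell you which ideas to rework or retire.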

A sample of documented efficiency gains

- 59% faster web and marketing: Landing pages (68%), email QA (67%), dashboards (78%), workflows (57%), marketing reports (60%) and assisted code (25%)

- 56% faster creative: Brand checks (82%), visual concepting (67–93%), animation (64%), copy checks (50%), research (50%), drafting (38%) and ideation (25%)

- 40% faster strategy: Market research (44%), audience planning (42%), and insights (33%)

These aren’t estimates; they’re task-level, hours-based deltas against our pre-AI baselines.

While these gains are real, I don’t want to mislead you: the figures don’t represent entire departments improving at those averages. They are examples of specific workflows where AI created measurable gains, and many other use cases remain untapped.
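For readers who want to check the arithmetic: the headline figures are consistent with a simple unweighted average of the task-level percentages listed above. That is an observation, not a documented method, and the sketch assumes the midpoint (80) for the 67–93% visual concepting range.

```python
from statistics import mean

# Task-level percentages copied from the list above.
# Assumption: visual concepting (67-93%) is represented by its midpoint, 80.
task_gains = {
    "web and marketing": [68, 67, 78, 57, 60, 25],
    "creative": [82, 80, 64, 50, 50, 38, 25],
    "strategy": [44, 42, 33],
}

# Unweighted mean per area, rounded to the nearest whole percent.
headline = {area: round(mean(values)) for area, values in task_gains.items()}

for area, value in headline.items():
    print(f"{area}: {value}% faster")
```

Because the average is unweighted, a task done once a quarter counts as much as one done daily, which is another reason not to read these numbers as department-wide gains.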

What this means

Executives don’t need another AI tool. They need an operating system for work.

It starts with upskilling people so they know how to use AI. Then build processes around it, take an analytical approach to choosing the platform that supports them, and demand proof that the changes are worth keeping.

Do that, and AI stops being a side project. It becomes the default.

If you’re a CMO or COO, the next move isn’t a demo. It’s authorization: Authorize the investment in training, the redesign of core processes, the selection of a trusted platform and a measurement framework that proves real gains.

That’s the playbook I wrote. It’s not flashy, but it’s proven to work.

About the author

Yas Dalkilic